0G's Data Availability Layer

Introduction

What if building fully on-chain weren’t limited by inadequate data availability infrastructure? What if vast amounts of data could be stored on-chain and used to run complex AI models that support high-performance use cases?

“0G DA” is 0G’s infinitely scalable data availability solution, designed to address these limitations.

In this article we’ll provide an overview of:

- 0G DA’s novel architecture and data storage model, which together ensure extremely high data availability

- Implications for scalability of the Web3 industry

- Potential use cases

Existing DA Solutions Are Inadequate

Data Availability Layers (DALs) provide an efficient and secure way to publish data and keep it available for anyone to verify. For example, Layer 2 rollups publish data that must remain available so that anyone can verify that the off-chain transactions were executed correctly.

However, existing DALs cannot adequately support high-performance dApps or use cases such as on-chain AI.

Alternative solutions such as Celestia and EigenDA have been introduced as DALs through which data can be published more efficiently. They reduce DA costs and are seen as complementary to Ethereum’s upcoming danksharding.

Each has its own challenges:

- Celestia: Data must be broadcast to all consensus nodes (rather than selectively), which restricts throughput to 10 MBps. This is far from sufficient for a proliferation of Layer 2/3 networks and data-heavy use cases such as decentralized AI. Other issues (such as validator profitability or outdated code) may require significant updates going forward.

- EigenDA: While EigenDA scales horizontally, many performance bottlenecks remain. For example, validators are slowed down by the need to both maintain a full Ethereum node and interact with the staking smart contracts on Ethereum. The storage layer is also not designed to support scenarios such as decentralized machine learning (e.g. OPML), which require vast amounts of data.

By contrast, 0G DA is 0G’s infinitely scalable data availability solution, built directly into 0G’s general-purpose data storage system, 0G Storage.

Securely Storing Data for 0G Storage

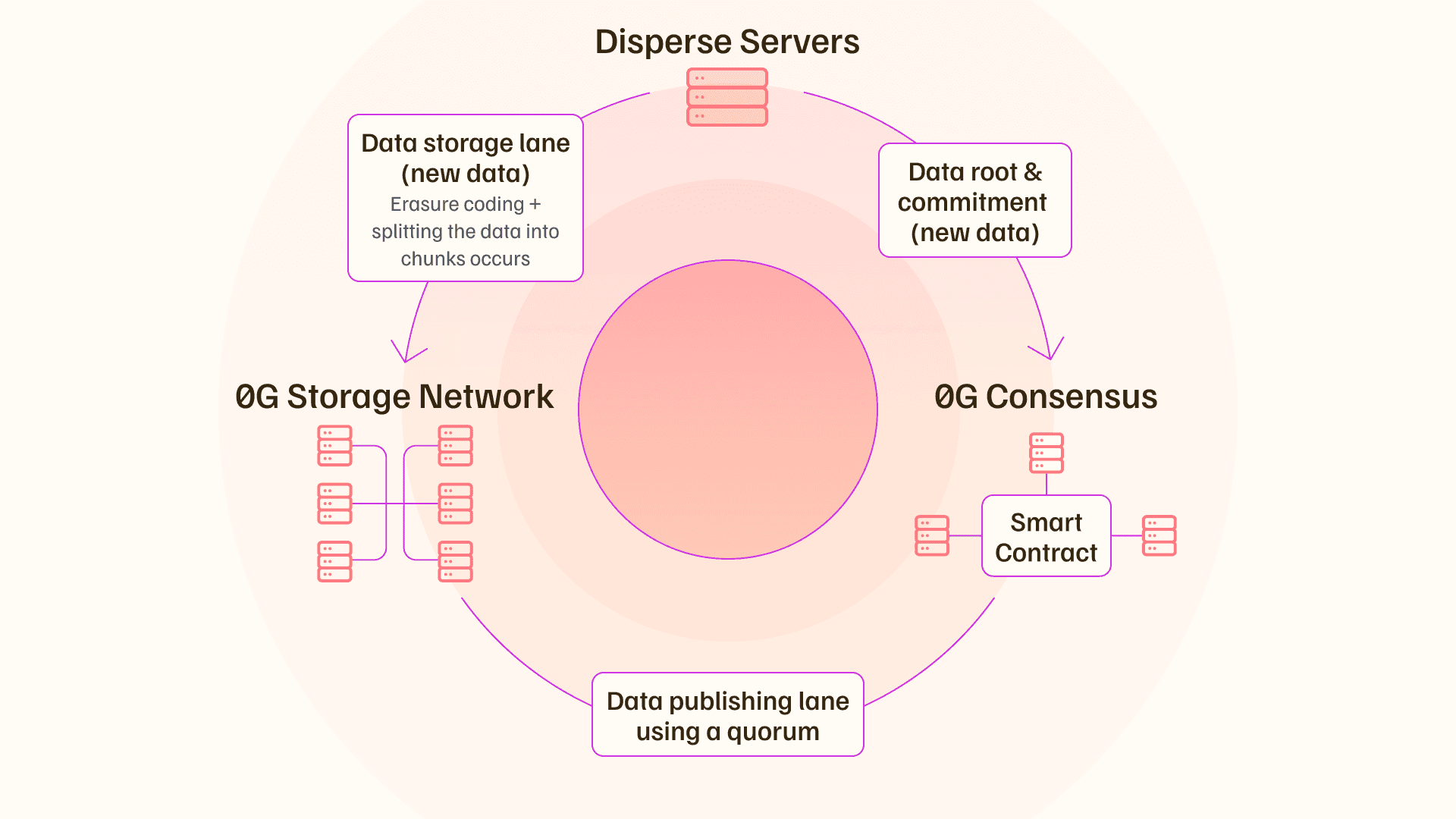

It helps to first understand how data arrives in the system.

Data stored in 0G’s system is first erasure-coded: it is fragmented into smaller, redundant pieces that are distributed across multiple storage locations, which enables fast recovery if any storage nodes fail. A Merkle tree is then formed from this data and submitted to 0G’s consensus layer (“0G Consensus”), which helps identify any changes to the data while also ensuring fast data retrieval when needed.

In addition to being erasure-coded, the data is split into “data chunks” that are then sent to various Storage Nodes. Storage Nodes maintain the 0G Storage Network and are responsible for storing and retrieving data chunks quickly and accurately, and for coordinating with 0G DA to confirm data availability.
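The erasure-coding and Merkle-tree steps described above can be illustrated with a minimal Python sketch. It is not 0G’s implementation: the single XOR parity piece is a toy stand-in for a production erasure code (which would typically tolerate several lost pieces), SHA-256 is an assumed hash function, and the names `split_with_parity`, `recover_piece`, and `merkle_root` are hypothetical.

```python
import hashlib
from functools import reduce
from typing import Optional


def xor_pieces(pieces: list[bytes]) -> bytes:
    """XOR equal-length byte strings together, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*pieces))


def split_with_parity(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal-size pieces plus one XOR parity piece (toy erasure code)."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\x00")  # zero-pad so all pieces have equal length
    pieces = [padded[i * size:(i + 1) * size] for i in range(k)]
    return pieces + [xor_pieces(pieces)]


def recover_piece(pieces: list[Optional[bytes]]) -> list[bytes]:
    """Rebuild at most one missing piece (marked None) from the surviving ones."""
    missing = [i for i, p in enumerate(pieces) if p is None]
    if len(missing) > 1:
        raise ValueError("a single-parity code can repair only one lost piece")
    repaired = list(pieces)
    if missing:
        survivors = [p for p in pieces if p is not None]
        repaired[missing[0]] = xor_pieces(survivors)
    return repaired


def merkle_root(leaves: list[bytes]) -> bytes:
    """Compute a Merkle root over the pieces (hash function is an assumption)."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [hashlib.sha256(leaf).digest() for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last node on odd-sized levels
        level = [hashlib.sha256(level[i] + level[i + 1]).digest()
                 for i in range(0, len(level), 2)]
    return level[0]


pieces = split_with_parity(b"hello 0G storage", k=4)
root = merkle_root(pieces)  # a commitment like this is what consensus would record
```

If one piece is lost, `recover_piece` rebuilds it and the recomputed root matches the original, while any tampered piece changes the root. That is the property that lets a small commitment detect changes to a large dataset.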

0G DA’s Novel Architecture

To reiterate, 0G has three parts:

- 0G Storage: the general-purpose data system that is managed by Storage Nodes.

- 0G DA: the data availability system built on top of 0G Storage.

- 0G Consensus: 0G’s consensus network.

The architecture looks as follows:

Confirming data availability relies on two workflows:

- The Data Publishing Lane: For data availability guarantees.

- The Data Storage Lane: For large data transfers to 0G Storage.

The Data Publishing Lane is critical to 0G’s data availability property and works by having the consensus network check the aggregated signatures of the corresponding Storage Nodes. In other words, 0G’s Storage Nodes must reliably certify that the data truly exists in 0G Storage, and 0G’s consensus network verifies that certification.

This is extremely quick, as the Data Publishing Lane sends only a small amount of data through the consensus protocol, which avoids bottlenecks.

0G takes an approach similar to EigenDA’s, whereby an “honest majority” of a selected group of Storage Nodes must agree that the data is correct (in return for mining rewards). Unlike EigenDA, 0G uses a Verifiable Random Function (VRF) to select Storage Nodes randomly, which guards against collusion.

0G Consensus can then quickly verify data availability, at a rate 1,000x faster than Ethereum’s danksharding and 4x faster than Solana’s Firedancer.
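The quorum selection and honest-majority check described above can be sketched roughly as follows. This is an illustration under stated assumptions, not 0G’s protocol: a SHA-256 ranking over a shared seed stands in for a real VRF (which would also produce a verifiable proof), a plain set of node IDs stands in for aggregated signatures, and the strict-majority threshold and the names `select_quorum` and `is_available` are hypothetical.

```python
import hashlib


def select_quorum(node_ids: list[str], seed: bytes, size: int) -> list[str]:
    """Pick `size` nodes by ranking each node on SHA-256(seed || node_id).

    Stand-in for a VRF: deterministic for a given seed, but without the
    proof of correct evaluation that a real VRF provides.
    """
    if size > len(node_ids):
        raise ValueError("quorum cannot be larger than the node set")
    ranked = sorted(node_ids,
                    key=lambda n: hashlib.sha256(seed + n.encode()).digest())
    return ranked[:size]


def is_available(quorum: list[str], attestations: set[str]) -> bool:
    """Accept the data if a strict majority of the quorum attested to it.

    `attestations` models the signers recovered from an aggregated signature;
    attestations from nodes outside the quorum are ignored.
    """
    votes = len(set(quorum) & attestations)
    return votes * 2 > len(quorum)


nodes = [f"node-{i}" for i in range(20)]
quorum = select_quorum(nodes, seed=b"epoch-1", size=5)
print(is_available(quorum, set(quorum[:3])))  # 3 of 5 attest: accepted
print(is_available(quorum, set(quorum[:2])))  # 2 of 5 attest: rejected
```

Because only the quorum’s attestation, rather than the data itself, needs to reach consensus, the check stays small regardless of how large the stored data is.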

Unparalleled Scalability with 0G DA

So far we’ve established that 0G Storage can store any type of data, and quickly prove data availability to 0G DA through the use of a randomly chosen quorum of Storage Nodes.

Where does the infinite scalability come from?

0G Consensus can comprise an arbitrary number of networks, all relying on the same set of validators, who can validate for all of them simultaneously. For example, there could be 5 consensus networks, or 5,000, all securely managed by the same set of validators through a process known as shared staking.

These validators would stake their assets on a primary network (which would likely be Ethereum), and a slashable event on any of the consensus networks would trigger slashing on that primary network. When validators receive incentives on a network they are validating, they can burn those tokens to receive them on the primary network.

As the 0G ecosystem continues to grow, additional consensus networks can be added to infinitely scale the system.
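The shared-staking flow described above can be modeled with a small Python ledger. This is a sketch under assumptions rather than 0G’s actual mechanism: the class `SharedStaking`, its method names, and the penalty expressed in basis points are all hypothetical, and real slashing conditions and reward bridging would be enforced by on-chain contracts.

```python
from dataclasses import dataclass, field


@dataclass
class SharedStaking:
    """Toy ledger: one stake on the primary network secures many consensus networks."""
    stakes: dict[str, int] = field(default_factory=dict)        # validator -> primary-network stake
    network_rewards: dict[tuple[str, str], int] = field(default_factory=dict)  # (network, validator) -> unclaimed rewards
    main_balance: dict[str, int] = field(default_factory=dict)  # validator -> rewards received on the primary network
    slash_events: list[tuple[str, str, int]] = field(default_factory=list)

    def stake(self, validator: str, amount: int) -> None:
        self.stakes[validator] = self.stakes.get(validator, 0) + amount

    def reward(self, network: str, validator: str, amount: int) -> None:
        key = (network, validator)
        self.network_rewards[key] = self.network_rewards.get(key, 0) + amount

    def claim(self, network: str, validator: str) -> int:
        """Burn rewards held on `network` and credit them on the primary network."""
        amount = self.network_rewards.pop((network, validator), 0)
        self.main_balance[validator] = self.main_balance.get(validator, 0) + amount
        return amount

    def slash(self, network: str, validator: str, penalty_bps: int) -> int:
        """A fault on any network reduces the validator's single primary stake."""
        current = self.stakes.get(validator, 0)
        penalty = current * penalty_bps // 10_000
        self.stakes[validator] = current - penalty
        self.slash_events.append((network, validator, penalty))
        return penalty


ledger = SharedStaking()
ledger.stake("val-1", 1000)
ledger.reward("net-A", "val-1", 40)
ledger.claim("net-A", "val-1")       # rewards move to the primary network
ledger.slash("net-B", "val-1", 500)  # a fault on net-B slashes the primary stake
```

The key design point is that `stakes` is keyed only by validator, not by network: adding another consensus network adds entries to `network_rewards` but requires no new stake, which is what allows the same validator set to secure every network.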

Unlocking New Potential: Advanced Use Cases

Traditionally, the greatest benefit of a DA solution is enabling Layer 2s and Layer 3s to publish data cost-efficiently. With 0G, the benefits extend far beyond this.

Because vast amounts of data can be stored in 0G Storage and quickly retrieved as needed, entire AI models can be hosted on-chain with full data availability.

Here’s how various parties benefit:

- L1s / L2s: These parties may use 0G’s AI models, or use 0G for data availability and storage. Partners include Polygon, Arbitrum, Fuel, Manta Network, and more.

- Decentralized Shared Sequencers: Sequencers are used to order L2 transactions before sending them to Ethereum, while shared sequencers allow multiple rollups to share a single decentralized network of sequencers. Any DA solution, whether 0G’s or not, can use 0G to stream data between all networks that use a particular shared sequencer, enabling efficient sequencing.

- Bridges: Given that networks can easily store their state using 0G, state migration is possible between networks, facilitating secure cross-chain transfers. For example, a network could use 0G DA to confirm that a user truly holds assets and has confirmed a transfer, then stream this to another network to bridge quickly and securely.

- Rollups-as-a-Service (RaaS): 0G can serve as a DA option and data storage infrastructure for RaaS providers like Caldera and AltLayer.

- DeFi: 0G’s quick and scalable DA may support highly efficient DeFi on specific L2s and L3s, thanks to fast settlement and storage. One example is storing order book data for high-frequency trading.

- On-chain Gaming: Gaming requires vast amounts of cryptographic proof-related data that needs to be reliably stored, on top of all regular metadata such as a given player’s assets, points, actions, and more.

- Data Markets: It makes sense for Web3 data markets to store their data on-chain, where it can be updated and queried quickly with 0G.

For now, 0G is heavily focused on the crypto AI market, where many hurdles limit progress. 0G’s ability to store massive amounts of data and to quickly access this data (or any AI models built using the data) brings unprecedented potential to the space, and we’re busy making this a reality.

Final Takeaways

In conclusion, 0G DA provides an infinitely scalable DA solution that is built on top of 0G Storage and whose data can be quickly verified by 0G’s consensus network, 0G Consensus.

While that is a lot of information, it can be summarized as follows:

- 0G DA is connected to 0G Storage. It can prove data availability for 0G Storage by randomly selecting a quorum of 0G Storage Nodes to attest to data, using an “honest majority” assumption for security.

- 0G Consensus verifies that Storage Nodes are truthful, and 0G Consensus can be infinitely scaled by adding new networks managed by the same validators in a process termed “shared staking”.

- This is the most scalable solution in the market and can be used by a wide range of projects, including L1s/L2s, bridges, rollups-as-a-service, data markets, DeFi, and much more.

This is an exciting time to be in Web3, but there is still much to do.

To learn more and join our newsletter, visit https://0g.ai/ or follow us on Twitter.